I’m in awe. There’s a (family of) programming languages which solves everything. Really.

- it works on the JVM, V8, and CLR, and it interoperates with what already exists

- it’s efficient, it’s dynamic, and it has parallelism built in (threaded or cooperative)

- it’s so malleable that any sort of DSL can trivially be created on top of it

As this fella says at this very point in his video… State. You’re doing it wrong.

I’ve been going about programming in the wrong way for decades (as a side note: the Tcl language did get it right, up to a point, despite some other troublesome shortcomings).

The language I’m talking about re-uses the best of what’s out there, and even embraces it. All the existing libraries in JavaScript can be used when running in the browser or in Node.js, and similarly for Java or C# when running in those contexts. The VMs, as I already mentioned, also get reused, which means that decades of research and optimisation are taken advantage of.

There’s even an experimental version of this (family of) programming languages for Go, so there again, it becomes possible to add this approach to whatever already exists out there, or is being introduced now or in the future.

Due to the universal reach of JavaScript these days, on browsers, servers, and even on some embedded platforms, that is the host which interests me most, so what I’ve been sinking my teeth into recently is “ClojureScript”, which specifically targets JavaScript.

Let me point out that ClojureScript is not another “pre-processor” like CoffeeScript.

“State. You’re doing it wrong.”

As Rich Hickey, who spoke those words in the above video quickly adds: “which is ok, because I was doing it wrong too”. We all took a wrong turn a few decades ago.

The functional programming (FP) people got it right… Haskell, ML, that sort of thing.

Or rather: they saw the risks and went to a place where few people could follow (monads?).

![FP is for geniuses]()

What Clojure and ClojureScript do, is to bring a sane level of FP into the mix, with “immutable persistent datastructures”, which makes it all very practical and far easier to build with and reason about. Code is a transformation: take stuff, do things with it, and return derived / modified / updated / whatever results. But don’t change the input data.
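To make "take stuff, do things with it, return results, but don’t change the input" a bit more concrete, here is a minimal sketch in TypeScript rather than ClojureScript. The `Account` shape and the `deposit` function are made up for this illustration, and plain object copying only stands in for real persistent data structures.

```typescript
// Hypothetical data shape, invented for this example only.
interface Account {
  readonly owner: string;
  readonly balance: number;
  readonly deposits: readonly number[];
}

// A pure transformation: it builds and returns a new Account
// and never touches the one it was given.
function deposit(account: Account, amount: number): Account {
  return {
    ...account,
    balance: account.balance + amount,
    deposits: [...account.deposits, amount],
  };
}

const before: Account = { owner: "ann", balance: 100, deposits: [] };
const after = deposit(before, 25);

// "before" is still exactly what it was; "after" is the derived value.
console.log(before.balance, after.balance);
```

Anyone still holding on to `before` keeps seeing the old value, which is what makes such code easy to reason about.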

Why does this matter?

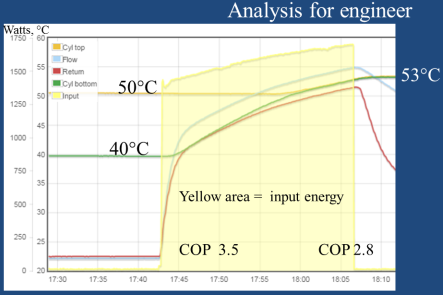

Let’s look at a recent project taking the world by storm: React, yet another library for building user interfaces (in the browser and on mobile). The difference with AngularJS is the conceptual simplicity. To borrow another image from a similar approach in CycleJS:

![Screen Shot 2015-08-16 at 16.08.35]()

Things happen in a loop: the computer shows stuff on the screen, the user responds, and the computer updates its state. In a talk by CycleJS author Andre Staltz, he actually goes so far as to treat the user as a function: screen in, key+mouse actions out. Interesting concept!

Think about it:

- facts are stored on the disk, somewhere on a network, etc

- a program is launched which presents (some of it) on the screen

- the user interface leads us, the monkeys, to respond and type and click

- the program interprets these as intentions to store / change something

- it sends out stuff to the network, writes changes to disk (perhaps via a database)

- these changes lead to changes to what’s shown on-screen, and the cycle repeats

Even something as trivial as scrolling down is a change to a scroll position, which translates to a different part of a list or page being shown on the screen. We’ve been mixing up the view side of things (what gets shown) with the state (some would say “model”) side, which in this case is the scroll position – a simple number. The moment you take them apart, the view becomes nothing more than a function of that value. New value -> new view. Simple.
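The scrolling case can be sketched in a few lines. This is only an illustration under simplifying assumptions: fixed-height rows, and a hypothetical `visibleRows` function which ignores rows that are only partially in view.

```typescript
// The view as a function of state: given the scroll position (a number),
// return the rows that should be shown. No hidden variables involved.
function visibleRows<T>(
  items: readonly T[],
  scrollTop: number,      // the "state": how far we have scrolled, in pixels
  rowHeight: number,      // assumed to be the same for every row
  viewportHeight: number, // height of the visible area, in pixels
): T[] {
  const first = Math.floor(scrollTop / rowHeight);
  const count = Math.ceil(viewportHeight / rowHeight);
  return items.slice(first, first + count);
}

const rows = ["a", "b", "c", "d", "e", "f"];

console.log(visibleRows(rows, 0, 20, 60));  // [ 'a', 'b', 'c' ]
console.log(visibleRows(rows, 40, 20, 60)); // [ 'c', 'd', 'e' ]
```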

Nowhere in this story is there a requirement to tie state into the logic. It didn’t really help that object orientation (OO) taught us to always combine and even hide state inside logic.

Yet I (we?) have been programming with variables which remember / change and loops which iterate and increment, all my life. Because that’s how programming works, right?

Wrong. This model leads to madness. Untraceable, undebuggable, untestable, unverifiable.

In a way, Test-Driven Development (TDD) shows us just how messy it got: we need to explicitly compare what a known input leads to with the expected outcome. Which is great, but writing code which is testable becomes a nightmare when there is state everywhere. So we invented “mocks” and “spies” and what-have-you, to be able to isolate that state again.

What if everything we implemented in code were easily reducible to small steps which cleanly compose into larger units? Each step being a function which takes one or more values as state and produces results as new values? Without side-effects or state variables?
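As a rough idea of what that looks like, here is a sketch with three tiny functions and a hand-written `pipe` helper. All the names are invented for this example; real code would likely use whatever composition helper its library offers.

```typescript
// Three small steps, each a pure function from values to values.
const parseNumbers = (line: string): number[] => line.split(",").map(Number);
const keepPositive = (xs: number[]): number[] => xs.filter((x) => x > 0);
const sum = (xs: number[]): number => xs.reduce((acc, x) => acc + x, 0);

// A minimal composition helper for exactly three steps (illustrative only).
function pipe<A, B, C, D>(
  f: (a: A) => B,
  g: (b: B) => C,
  h: (c: C) => D,
): (a: A) => D {
  return (a) => h(g(f(a)));
}

// The larger unit is just the small steps glued together.
const total = pipe(parseNumbers, keepPositive, sum);

console.log(total("3,-1,4")); // 7
```

Testing needs no mocks or spies here: each step can be checked on its own with a known input and an expected output.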

Then again, purely functional programming with no side-effects at all is silly in a way: if there are zero side-effects, then the screen wouldn’t change, and the whole computation would be useless. We do need side-effects, because they lead to a screen display, physical-computing stuff such as motion & sound, saved results, messages going somewhere, etc.

What we don’t need, is state sprinkled across just about every single line of our code…

To get back to React: that’s exactly where it revolutionises the world of user interfaces. There’s a central repository of “the truth”, which is in fact usually nothing more than a deeply nested JavaScript data structure, from which everything shown on the web page is derived. No more messing with the DOM, putting all sorts of state into it, having to update stuff everywhere (and all the time!) for dynamic real-time apps.

React (a.k.a. ReactJS) treats an app as a pipeline: state => view => DOM => screen. The programmer designs and writes the first two, React takes care of the DOM and screen.

I’ll get back to ClojureScript, please hang in there…

What’s missing in the above, is user interaction. We’re used to the following:

mouse/keyboard => DOM => controller => state

That’s the Model-View-Controller (MVC) approach, as pioneered by Smalltalk in the 80’s. In other words: user interaction goes in the opposite direction, traversing all those steps we already have in reverse, so that we end up with modified state all the way back to the disk.

This is where AngularJS took off. It was founded on the concept of bi-directional bindings, i.e. creating an illusion that variable changes end up on the screen, and screen interactions end up back in those same variables – automatically (i.e. all taken care of by Angular).

But there is another way.

Enter “reactive programming” (RP) and “functional reactive programming” (FRP). The idea is that user interaction still needs to be interpreted and processed, but that the outcome of such processing completely bypasses all the above steps. Instead of bubbling back up the chain, we take the user interaction, define what effect it has on the original central-repository-of-the-truth, period. No figuring out what our view code needs to do.

So how do we update what’s on screen? Easy: re-create the entire view from the new state.

That might seem ridiculously inefficient: recreating a complete screen / web-page layout from scratch, as if the app was just started, right? But the brilliance of React (and several designs before it, to be fair) is that it actually manages to do this really efficiently.

Amazingly so in fact. React is faster than Angular.

Let’s step back for a second. We have code which takes input (the state) and generates output (some representation of the screen, DOM, etc). It’s a pure function, i.e. it has no side effects. We can write that code as if there is no user interaction whatsoever.
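A bare-bones sketch of such a pure "state in, view out" function follows. It is not React’s API: the `AppState` shape and `renderView` are hypothetical, and an HTML string stands in for whatever representation a real library would produce (it also skips HTML escaping, for brevity).

```typescript
// Hypothetical application state, invented for this example.
interface AppState {
  readonly title: string;
  readonly todos: readonly string[];
}

// Pure function: the same state always yields the same view description.
// Note: no escaping of the text, which real code would need.
function renderView(state: AppState): string {
  const items = state.todos.map((t) => `<li>${t}</li>`).join("");
  return `<h1>${state.title}</h1><ul>${items}</ul>`;
}

const s1: AppState = { title: "Todo", todos: ["write"] };
// A new state is a new value; the whole view is simply derived again.
const s2: AppState = { ...s1, todos: [...s1.todos, "publish"] };

console.log(renderView(s1));
console.log(renderView(s2));
```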

Think – just think – how much simpler code is if it only needs to deal with the one-way task of rendering: what goes where, how to visualise it – no clicks, no events, no updates!

Now we need just two more bits of logic and code:

- we tell React which parts respond to events (not what they do, just that they do)

- separately, we implement the code which gets called whenever these events fire, grabs all relevant context, and reports what we need to change in the global state
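The two bits of logic above could look roughly like this. It is a sketch of the idea, not of React or any particular library: `CounterState`, `Action`, `update` and `createStore` are all names made up here, and the "render" step just records a string.

```typescript
interface CounterState {
  readonly count: number;
}

// Events are reported as plain data describing what happened.
type Action = { type: "increment" } | { type: "reset" };

// The only place where "what to change in the global state" is decided.
function update(state: CounterState, action: Action): CounterState {
  switch (action.type) {
    case "increment":
      return { count: state.count + 1 };
    case "reset":
      return { count: 0 };
  }
}

// A tiny central store: every dispatched action produces a new state,
// and the view is re-derived from that state from scratch.
function createStore(initial: CounterState, render: (s: CounterState) => void) {
  let current = initial;
  render(current);
  return {
    dispatch(action: Action): void {
      current = update(current, action);
      render(current);
    },
    getState: (): CounterState => current,
  };
}

const rendered: string[] = [];
const store = createStore({ count: 0 }, (s) => rendered.push(`count: ${s.count}`));

// These calls stand in for click handlers wired up in the view.
store.dispatch({ type: "increment" });
store.dispatch({ type: "increment" });
store.dispatch({ type: "reset" });

console.log(rendered);
```

The event handlers never touch the view; they only say what happened, and the rest follows from the new state.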

That’s it. The concepts are so incredibly transparent, and the resulting code so unbelievably clean, that React and its very elegant API are literally taking the Web-UI world by storm.

Back to ClojureScript

So where does ClojureScript fit in, then? Well, to be honest: it doesn’t. Most people seem to be happy just learning “The React Way” in normal main-stream JavaScript. Which is fine.

There are some very interesting projects on top of React, such as Redux and React Hot Loader. This “hot loading” is something you have to see to believe: editing code, saving the file, and picking up the changes in a running browser session without losing context. The effect is like editing in a running app: no compile-run-debug cycle, instant tinkering!

Interestingly, Tcl also supported hot-loading. Not sure why the rest of the world didn’t.

Two weeks ago I stumbled upon ClojureScript. Sure enough, they are going wild over React as well (with Om and Reagent as the main wrappers right now). And with good reason: it looks like Om (built on top of React) is actually faster than React used from JavaScript.

The reason for this is their use of immutable data structures, which forces you to not make changes to variables, arrays, lists, maps, etc. but to return updated copies (which are very efficient through a mechanism called “structural sharing”). As it so happens, this fits the circular FRP / React model like a glove. Shared trees are ridiculously easy to diff, which is the essence of why and how React achieves its good performance. And undo/redo is trivial.
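Here is a small sketch of why sharing makes diffing and undo cheap. It uses hand-rolled copying of plain objects, which is only an approximation of Clojure’s persistent data structures; the `Draft` shape, `setTitle` and `changedKeys` are hypothetical names for this example.

```typescript
// Hypothetical document shape, invented for this example.
interface Draft {
  readonly header: { readonly title: string };
  readonly body: { readonly paragraphs: readonly string[] };
}

// Copy only the path that changes; everything else is shared by reference.
function setTitle(draft: Draft, title: string): Draft {
  return { ...draft, header: { ...draft.header, title } };
}

// Because nothing is ever mutated, "same reference" means "unchanged",
// so a diff can skip whole subtrees with a single comparison.
function changedKeys<T extends object>(a: T, b: T): (keyof T)[] {
  return (Object.keys(a) as (keyof T)[]).filter((k) => a[k] !== b[k]);
}

const v1: Draft = {
  header: { title: "Draft" },
  body: { paragraphs: ["one", "two"] },
};
const v2 = setTitle(v1, "Final");

console.log(v2.body === v1.body); // true: the body subtree is shared
console.log(changedKeys(v1, v2)); // [ 'header' ]

// Undo is just keeping the old versions around; they never change.
const versions: Draft[] = [v1, v2];
const undone = versions[versions.length - 2];
console.log(undone.header.title); // Draft
```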

Hot-loading is normal in the Clojure & ClojureScript world. Which means that editing in a running app is not a novelty at all, it’s business as usual. As with any Lisp with a REPL.

Ah, yes. You see, Clojure and ClojureScript are Lisp-like in their notation. The joke used to be that LISP stands for: “Lots of Irritating Superfluous Parentheses”. When you get down to it, it turns out that there are not really many more of those than parens / braces in JavaScript.

But notation is not what this is all about. It’s the concepts and the design which matter.

Clojure (and ClojureScript) seem to be born out of necessity. It’s fully open source, driven by a small group of people, and evolving in a very nice way. The best introduction I’ve found is in the first 21 minutes of the same video linked to at the start of this post.

And if you want to learn more: just keep watching that same video, 2.5 hours of goodness. Better still: this 1-hour video, which I think summarises the key design choices really well.

There is no static typing as in Go, but I often found myself fighting Go’s type system anyway (and type hints can be added back in where needed). No callback hell as in JavaScript & Node.js, because Clojure has implemented Go-style CSP, with channels and goroutine-like “go blocks”, as a library (core.async). Which means that even in the browser, you can write code as if there were multiple processes, communicating via channels in either synchronous or asynchronous fashion. And yes, it really works.
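To give a feel for "multiple processes in a single thread", here is a toy sketch using generators as cooperative processes and a tiny round-robin scheduler. This is not core.async and not how it is implemented in detail; `Chan`, `Instr`, `Proc` and `runAll` are all invented for this illustration, and the channel is a simple bounded buffer.

```typescript
// An instruction a process hands to the scheduler when it wants to block.
type Instr<T> =
  | { op: "put"; ch: Chan<T>; value: T }
  | { op: "take"; ch: Chan<T> };

// A bounded channel: puts wait when it is full, takes wait when it is empty.
class Chan<T> {
  readonly queue: T[] = [];
  constructor(readonly capacity: number = 1) {}
}

// A process is a generator that yields instructions and gets taken values back.
type Proc<T> = Generator<Instr<T>, void, T | undefined>;

// Round-robin scheduler: resume every process whose instruction can proceed,
// and stop when all are finished or nobody can make progress (deadlock).
function runAll<T>(procs: Proc<T>[]): void {
  let parked = procs
    .map((proc) => ({ proc, step: proc.next() }))
    .filter((p) => !p.step.done);
  let progressed = true;
  while (parked.length > 0 && progressed) {
    progressed = false;
    const stillParked: typeof parked = [];
    for (const p of parked) {
      const instr = p.step.value as Instr<T>;
      if (instr.op === "put" && instr.ch.queue.length < instr.ch.capacity) {
        instr.ch.queue.push(instr.value);
        p.step = p.proc.next(undefined);
        progressed = true;
      } else if (instr.op === "take" && instr.ch.queue.length > 0) {
        p.step = p.proc.next(instr.ch.queue.shift());
        progressed = true;
      }
      if (!p.step.done) stillParked.push(p);
    }
    parked = stillParked;
  }
}

const ch = new Chan<number>(1);
const received: number[] = [];

function* producer(): Proc<number> {
  for (const n of [1, 2, 3]) yield { op: "put", ch, value: n };
}

function* consumer(): Proc<number> {
  for (let i = 0; i < 3; i++) {
    const v = yield { op: "take", ch };
    if (v !== undefined) received.push(v);
  }
}

runAll([producer(), consumer()]);
console.log(received); // [ 1, 2, 3 ]
```

Each process reads like straight-line code, with no callbacks in sight, even though the two take turns whenever the channel is full or empty.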

All the libraries from the browser + Node.js world can be used in ClojureScript without special tricks or wrappers, because – as I said – CLJ & CLJS embrace their host platforms.

The big negative is that CLJ/CLJS are different and not main-stream. But frankly, I don’t care at this point. Their conceptual power is that of Lisp and functional programming combined, and this simply can’t be retrofitted into the popular languages out there.

A language that doesn’t affect the way you think about programming, is not worth knowing

— Alan J. Perlis

I’ve been watching many 15-minute videos on Clojure by Tim Baldridge (it costs $4 to get access to all of them), and this really feels like it’s lightyears ahead of everything else. The amazing bit is that a lot of that (such as “core.async”) catapults into plain JavaScript.

As you can probably tell, I’m sold. I’m willing to invest a lot of my time in this. I’ve been doing things all wrong for a couple of decades (CLJ only dates from 2007), and now I hope to get a shot at mending my ways. I’ll report my progress here in a couple of months.

It’s not for the faint of heart. It’s not even easy (but it is simple!). Life’s too short to keep programming without the kind of abstractions CLJ & CLJS offer. Eh… In My Opinion.